Understanding and Managing Food Safety Risks

Over the course of the past 20 years, we have seen the emergence of risk analysis as the foundation for developing food safety systems and policies. This period has witnessed a gradual shift from a “hazards-based approach” to food safety (i.e., the mere presence of a hazard in a food was deemed unsafe) to a “risk-based approach” (i.e., determining whether exposure to a hazard has a meaningful impact on public health). It is now common to see industry, government and consumers all call for the adoption of risk-based programs to manage chemical, microbiological and physical risks associated with the production, processing, distribution, marketing and consumption of foods. However, there is not always a clear understanding about what risk management encompasses or about the consequences of developing risk management systems. Accordingly, it is worth exploring some of the food safety risk management concepts and principles that are emerging both nationally and internationally and are being used to establish risk management systems that are likely to dramatically transform the food safety landscape in the next 10 years.

The use of risk management concepts is not new. In fact, many of the much-discussed differences between hazard- and risk-based approaches disappear when examined more closely. If one carefully examines most traditional hazards-based food safety systems, at their core there are one or more key risk-related assumptions that drive the decision-making process. These risk-based decisions may not be made consciously but nevertheless effectively introduce decision criteria related to relative risk. The most common is the establishment of standard methods of analysis for verifying that a food system is controlling a specific hazard. Through the selection of the analytical method, the sampling plan and the frequency of testing, a risk-based decision (i.e., a decision based on the frequency and severity of the adverse effects of a hazard) is introduced. Typically, if a hazard occurs frequently and/or has a severe consequence, the frequency and sensitivity of analytical testing are made more stringent than if the hazard is infrequent and/or has mild adverse effects. For example, traditional sampling plans for powdered infant formula generally call for testing each lot for Salmonella enterica at a level of absence in 1.5 kg (sixty 25-g samples) versus the sampling of refrigerated ready-to-eat foods for Staphylococcus aureus which is done only infrequently and typically allows the microorganism to be present at levels between 100 and 1,000 CFU/g. Obviously, while these two microorganisms are both food safety hazards, risk-based decisions are made related to the stringency needed to control the hazard. Thus, the actual difference between hazard- and risk-based systems is often more about how uncertainty and transparency are dealt with during the decision-making process.

How Do I Manage Risk?

Managing food safety risks is like playing in a Texas hold ’em tournament (a hobby of mine). In poker, you can get lucky in the short run, but unless you know the odds of each individual hand, you are unlikely to be successful in the long run. The same is true with food safety. Manufacturers must understand their “odds” of producing a safe product and modify what “hands” they are going to play if they don’t think they have a winning strategy. The same is true for consumers when they prepare meals at home. They need to understand the “odds” that their purchasing, handling and preparation practices could lead them to introduce a food safety hazard and then modify their practices to improve their “odds.” It is the knowledgeable “player” who is going to be the winner in the long run. This is the advantage that risk management approaches bring to food safety: they increase the amount of knowledge mobilized to make better food safety decisions.

Much of the conceptual thinking on risk management systems has been taking place at the international level through intergovernmental organizations such as the Codex Alimentarius Commission (CAC) and the World Organization for Animal Health (OIE). This is not surprising since these organizations are involved in setting standards for foods and animals, respectively, in international trade. With the diversity of approaches to food production and manufacturing worldwide, it was critical for CAC to establish general principles and concepts related to food safety risk management decision making that could lead to harmonized international standards for the wide range of foods and potential hazards. The international sharing of ideas has been highly beneficial in identifying the critical role that risk assessment techniques are likely to play in the future to help government and industry translate public health goals into meaningful risk management programs. Some of the critical CAC documents related to the adoption of risk management approaches are the following:

• “Working Principles for Risk Analysis for Food Safety for Application by Governments”[1]

• “Working Principles for Risk Analysis Application in the Framework of the Codex Alimentarius”[2]

• “Principles and Guidelines for the Conduct of Microbiological Risk Assessments”[3]

• “Principles and Guidelines for the Conduct of Microbiological Risk Management (MRM)”[4]

While they are not always the most exciting documents, they are essential for anyone involved in developing food safety risk management systems.

What Tools Are Available?

One of the risk management tools that has helped facilitate the move to quantitative risk management has been the emerging concepts related to Food Safety Objectives (FSOs) – Performance Objectives (POs) that were introduced by the International Commission on Microbiological Specifications for Foods[5] and then elaborated by a series of FAO/WHO expert consultations and the CAC Committee on Food Hygiene.[4] These tools and concepts are dramatically changing the way that more traditional risk management metrics are being developed. The key to this approach is to use risk-modeling techniques to relate levels of exposure to the extent of public health consequences that are likely to occur. This information is then used to determine the levels of hazard control that need to be achieved at specific points along the food chain. This approach has the distinct advantage of including a clearly articulated level of control based on risk and flexibility in terms of the strategies and technologies that can be used to control foodborne hazards. It also provides a means of comparing the effectiveness and levels of control that can be achieved by focusing prevention/intervention efforts at different points along the food chain. For example, this approach is beginning to evaluate questions such as whether fresh-cut produce manufacturers would be better served by emphasizing on-farm prevention or post-harvest interventions. The use of risk management metrics concepts is also having a significant impact on the way that microbiological criteria are being developed. Two key examples are the CAC microbiological criteria developed recently for (i) powdered infant formula[6] and (ii) Listeria monocytogenes in ready-to-eat foods.[7]

How Do We Talk About Risk?

As government agencies and industry begin to adopt risk management approaches, the increased transparency of the process is producing a number of communication challenges. It is important to emphasize that this approach does not mean that there is a decrease in the level of protection afforded the consumer. Instead, it reflects the communication challenges related to explaining scientific concepts, describing the concepts of variability and uncertainty and explaining why food safety decisions cannot be based on “zero risk.” For example, let’s consider the tool most commonly used to make decisions about the safety of toxic chemicals in foods. This has been done traditionally by determining the toxicity of a compound in one or more animal models to identify its NOEL (no observed effect level) values. The NOEL value obtained for the most sensitive animal model is then used to calculate “safe levels” of the toxicant after applying two 10-fold safety factors: an uncertainty factor for the potential differences between the animal model and humans and a variability factor for the potential diversity in sensitivity among humans. The calculated value is then used in conjunction with estimates of likely consumption to determine if there is a likelihood of adverse responses in humans. Thus, what is generally perceived by the public as an absolute distinction between safe and unsafe doses is a much more nuanced decision that weighs population susceptibility, likely levels of exposure and status of our knowledge about the toxicant mechanism of action.
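The arithmetic behind the two 10-fold safety factors can be sketched in a few lines of Python. This is only an illustration of the calculation described above: the function names, the NOEL value, the residue concentration, the consumption figure and the body weight are all hypothetical and are not taken from any regulatory assessment.

```python
def safe_level(noel_mg_per_kg_bw_day,
               uncertainty_factor=10.0,   # animal model vs. humans
               variability_factor=10.0):  # differences among humans
    """Divide the NOEL by both safety factors (100-fold in total)."""
    return noel_mg_per_kg_bw_day / (uncertainty_factor * variability_factor)


def estimated_intake(conc_mg_per_kg_food, food_kg_per_day, body_weight_kg):
    """Estimated daily exposure in mg per kg body weight per day."""
    return conc_mg_per_kg_food * food_kg_per_day / body_weight_kg


# Hypothetical inputs, chosen only to show the calculation.
noel = 5.0                                    # mg/kg body weight/day
limit = safe_level(noel)                      # NOEL / 100
intake = estimated_intake(2.0, 0.1, 60.0)     # 2 mg/kg food, 100 g/day, 60 kg adult

print(f"calculated safe level: {limit:.3f} mg/kg bw/day")
print(f"estimated intake:      {intake:.4f} mg/kg bw/day")
print("intake below safe level" if intake < limit else "intake exceeds safe level")
```

The comparison in the last line is the step where consumption estimates enter the decision, which is why the same compound can be acceptable in one food and not in another.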

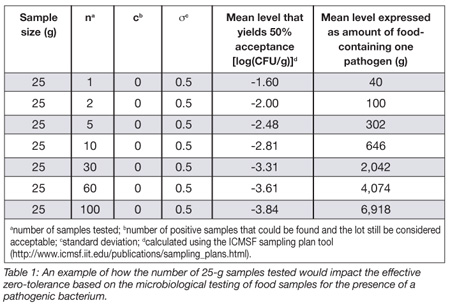

Similar risk communication challenges are associated with pathogenic foodborne microorganisms that cause infectious or toxicoinfectious diseases. One of the most poorly understood is the use of microbial testing against a “zero tolerance” standard.[8] Zero tolerance is used as a means of expressing a level of concern. However, verification of a zero tolerance standard requires that the standard be “operationalized” by specifying a standard sampling and testing protocol; that is, if a lot of food tested against the standard protocol is found negative, it is assumed that this lot is free of the microbiological hazard. The dilemma is that as soon as a zero tolerance standard is operationalized, it is no longer a zero tolerance. Instead, it becomes either a non-transparent, method-based threshold based on sensitivity of the testing protocol or a non-transparent biologically based threshold based on the presumed ability of the microorganism to cause disease. As an example, Table 1 depicts the relative stringency of a zero tolerance standard depending on the number of 25-g samples required to be analyzed. While these are all zero tolerances, they differ in their stringency by as much as 170-fold. In a fully transparent risk management program, zero tolerance standards would be replaced with the actual performance of the sampling program. However, this would eliminate the simple though not necessarily accurate separation of products that do or do not have a pathogenic microorganism.
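One way to see why the number of samples changes the meaning of "zero tolerance" is to calculate the contamination level that a sampling plan would reliably detect. The sketch below assumes that cells are randomly (Poisson) distributed throughout the lot and that the test always detects a cell when one is present in a sample; both are simplifying assumptions, the function names are hypothetical, and the output is not intended to reproduce Table 1.

```python
import math


def prob_lot_accepted(conc_cfu_per_g, n_samples, sample_g=25.0):
    """Probability that every sample tests negative (Poisson assumption)."""
    return math.exp(-conc_cfu_per_g * sample_g * n_samples)


def conc_rejected_with_confidence(n_samples, confidence=0.95, sample_g=25.0):
    """Concentration (CFU/g) at which a lot is rejected with the given confidence."""
    return -math.log(1.0 - confidence) / (sample_g * n_samples)


for n in (1, 5, 15, 30, 60):
    c = conc_rejected_with_confidence(n)
    print(f"n = {n:2d} samples: detects about 1 CFU per {1.0 / c:,.0f} g "
          f"with 95% confidence")
```

Under these assumptions, stringency scales directly with the total mass analyzed, so a sixty-sample plan is 60 times more stringent than a single-sample plan even though both are described as zero tolerance.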

Another related communication challenge is describing the effectiveness of intervention technologies as a means of managing food safety risks. As before, the industry and government agencies want to be able to provide a high degree of assurance to consumers that a product is safe but are faced with the fact that the processing technologies do not provide absolute elimination of hazards. As an example, consider the use of pasteurization to eliminate a vegetative pathogenic bacterium such as Salmonella. To be considered a pasteurization step, the inactivation technology must be capable of providing a 5- to 7-log inactivation, that is, a 100,000- to 10,000,000-fold reduction in the level of the target microorganism. Thus, if we had a 100-g serving of a food that contained one Salmonella per g and subjected the product to a 5-log inactivation, then one would assume that Salmonella was eliminated. However, microbial inactivation is probabilistic in nature and process calculations take into account “partial survivors.” Therefore, in the above scenario, traditional first-order kinetics would predict that after the treatment approximately 1 serving in 1,000 would have a viable Salmonella cell. It is important to note that this is still a 100,000-fold reduction in relative risk.
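The "1 serving in 1,000" figure follows directly from the numbers in the scenario, as the short Python sketch below shows. The log-reduction step is the first-order calculation described above; treating the number of survivors per serving as Poisson-distributed is an added assumption used here to convert an average of "partial survivors" into a probability, and the function names are hypothetical.

```python
import math


def expected_survivors(serving_g, cfu_per_g, log_reduction):
    """Mean number of surviving cells per serving after the treatment."""
    return serving_g * cfu_per_g * 10.0 ** (-log_reduction)


def prob_serving_positive(mean_survivors):
    """Probability that a serving holds at least one survivor (Poisson assumption)."""
    return -math.expm1(-mean_survivors)


# Scenario from the text: 100-g serving, 1 Salmonella per g, 5-log treatment.
mean = expected_survivors(100.0, 1.0, 5.0)
p = prob_serving_positive(mean)
print(f"mean survivors per serving: {mean:g}")
print(f"about 1 positive serving in {1.0 / p:,.0f}")

# More stringent treatments, for comparison.
for logs in (6.0, 7.0, 8.0):
    p_logs = prob_serving_positive(expected_survivors(100.0, 1.0, logs))
    print(f"{logs:.0f}-log treatment: about 1 positive serving in {1.0 / p_logs:,.0f}")
```

Each additional log of inactivation lowers the expected rate of positive servings roughly 10-fold, but the rate never reaches zero.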

A corollary to this scenario is that it is equally difficult to communicate that if a 5-log treatment is an acceptable level of control for a food safety system, the detection of positive Salmonella at a rate of 1 per 1,000 servings would not indicate that the system was out of control. This is why most food processors increase the stringency of their inactivation treatments by 2 to 3 orders of magnitude to further reduce the risk that a positive serving occurs during verification testing. It is worth noting that process control verification testing is based on detecting when the level of defective servings exceeds what would be expected when the system is functioning as designed.

What Are Some Additional Challenges?

In addition to daunting communication challenges, companies and government agencies are finding additional challenges as they embrace a risk management approach. A critical one is the “informatics” capability of the food industry. Food safety risk management systems thrive on real-time access to information that measures and integrates the performance of the various factors that contribute to food safety. This information typically involves multiple products and processes that utilize ingredients gathered from around the world and cross multiple industry sectors and national boundaries. The informatics systems needed to optimize food safety risk management decision making generally exceed the capabilities of major corporations and will be a significant challenge to small- and medium-sized companies and many national governments.

A related challenge is how to analyze the information once it has been collected. Traditionally, food safety systems have looked at individual steps along the food chain, treating each step in isolation from the others. However, as the systems become more complex, the interactions and synergies between the components become increasingly important and decrease the utility of simple analyses of individual steps. It is not surprising that as food companies and national governments adopt risk management approaches, they are increasingly turning to “systems thinking approaches.” Used extensively in a variety of disciplines, the basic tenet of systems thinking is that when a high level of complexity is reached, the impact of individual steps cannot be analyzed without considering the entire system. This leads to the expansion of process simulation modeling applications used for food safety risk assessment so that they can be adapted for risk management purposes.

One of the effects that increased use of risk modeling is having is a refocusing of where prevention and intervention programs are being applied along the food chain. Traditionally, food safety programs have focused on an “hour-glass” model where a large number of food production facilities (i.e., farms) send their crops to a limited number of food processing facilities that, in turn, distribute their finished products to a large number of retail markets. Thus, for traditional inspection activities, the simplest point upon which to focus is the processing facilities. However, consideration of the risk factors associated with the microbiological and chemical safety of produce and other foods is demonstrating that options for risk mitigation may be more effectively implemented at the farm or retail level. This is particularly true for hazards that have multiple pathways leading to adverse public health impacts. As an example, consider a complete risk assessment for Escherichia coli O157:H7 and other enterohemorrhagic, Shiga toxin-producing E. coli. The traditional focus has been on ground beef at meat slaughter and processing facilities. However, if the other vehicles associated with this group of pathogenic E. coli are considered, that is, fresh produce, water, contact with animals and dairy products, the nexus for these additional risk factors appears to be the farm environment. Potentially, the development of risk management programs at this point in the food chain would help reduce risks in all of the various risk pathways. As another example at the other end of the food chain, any assessment of the risks of foodborne norovirus infections clearly points to implementing risk management controls at the retail and foodservice segments.

What About HACCP?

No discussion of food safety risk management would be complete without considering Hazard Analysis and Critical Control Points (HACCP), the risk management system most widely used with foods. Despite the fact that HACCP has been almost universally adopted, there has been surprisingly little discussion about many of the concepts underpinning HACCP and how it fits into a risk management framework. In many ways, HACCP has suffered for being ahead of its time. HACCP articulated many of the principles associated with systems thinking long before systems thinking was formalized as a problem-solving approach. HACCP is clearly a “semi-quantitative” risk management system that is based on a largely qualitative assessment of hazards. The difference between an HACCP hazard assessment and a risk assessment has long been discussed and remains somewhat controversial. It is clear that selection of “significant hazards” involves consideration of the likelihood and severity of a hazard, implying a risk evaluation. However, the “risk decisions” reached in developing an HACCP plan are often non-transparent and not fully supported by an adequate assessment. HACCP has largely remained unchanged in its approach over the last 40 years. This may, in part, reflect its adoption for regulatory purposes. While HACCP has clearly been a beneficial regulatory tool for bringing a uniformity of approach to food safety, it has also had the unintended consequence of discouraging the evolution of HACCP as a risk management system.

With the emergence of risk management metrics, there is substantial interest on the part of a number of scientists in identifying how FSOs and POs can be used to help convert HACCP into a risk-based food safety system. It is clear that being able to more directly tie the stringency of an HACCP system to public health outcomes would be highly beneficial to harmonizing both national and international standards and would lead to more transparent food safety decision making. In particular, it would help address some of the longstanding limitations associated with HACCP. For example, the establishment of critical limits is an area that has eluded sound guidance, in part, because there have been insufficient means for relating the level of stringency required at individual critical control points to expected food safety outcomes.

The inclusion of risk management and risk assessment concepts, techniques and tools into HACCP may also help dispel some of the misconceptions about HACCP. For example, there is a general impression that HACCP cannot be applied to food systems where there is not a clear intervention step that eliminates the hazard. Likewise, there have been questions about whether HACCP can be used effectively in the farm and retail segments of the food chain, or whether it can be effectively applied to food defense issues. However, as a systems approach to managing food safety risks, HACCP should be viewed relative to its general framework, that is, managing food safety risks by gathering knowledge about the hazards associated with a food, determining the risk that these hazards represent, developing risk mitigations that control the hazards to the degree required and developing metrics that assess whether the required level of control is being achieved. With new decision tools that are being developed and applied to food safety issues, it should be feasible to greatly enhance HACCP systems. With the power of software applications, it should be possible to make these tools available in user-friendly formats suitable for all segments of the food industry.

What Have We Learned?

In summary, the management of food safety risks has been undergoing a quiet revolution as it adopts risk analysis approaches. This is stimulating a dramatic shift from qualitative, often non-transparent decision criteria to quantitative, fully transparent consideration of the science underlying food safety decisions. However, this evolution in thinking is not without its challenges, particularly in communications, informatics and availability of adequately educated specialists and consumers alike. There is no question these advances in risk management have the potential for enhancing the scientific basis of our food safety systems and provide a means for truly employing a transparent, risk-based approach in the development of food safety policies. However, what is transparent to risk assessors and risk managers who have had the advantage of advanced training in these techniques and concepts is often completely non-transparent to most of the non-technical stakeholders. Without a concerted effort to educate the operators of small- to medium-sized companies, as well as the agricultural, foodservice and retail sectors, consumers and developing nations, this evolution to more sophisticated decision making is likely to meet increased resistance. This can be summed up nicely by paraphrasing a question posed by a fellow speaker at a conference I attended a couple of years ago in Singapore, “How do you expect us to adopt risk analysis when we are still trying to figure out how to use HACCP?” It is critical that the food safety community invest in enhanced communication and education programs and “user-friendly” tools so companies and national governments can readily reap the advantages of these new approaches and efforts to harmonize regulatory policies so they can be consistent with a risk analysis approach to managing food safety hazards. The future of risk analysis approaches to food safety is bright, but only if we nurture its growth.

Robert L. Buchanan, Ph.D., Director of the University of Maryland’s Center for Food Safety and Security Systems, received his B.S., M.S., M.Phil. and Ph.D. degrees in Food Science from Rutgers University and post-doctoral training in mycotoxicology at the University of Georgia. Since then he has 30+ years of experience teaching, conducting research in food safety and working at the interface between science and public health policy, first in academia, then in government service in both USDA and FDA and, most recently, at the University of Maryland. His scientific interests are diverse, and include extensive experience in predictive microbiology, quantitative microbial risk assessment, microbial physiology, mycotoxicology and HACCP systems. He has published widely on a range of subjects related to food safety and is one of the co-developers of the widely used USDA Pathogen Modeling Program. Dr. Buchanan has served on numerous national and international advisory bodies including as a member of the International Commission on Microbiological Specifications for Foods, as a six-term member of the National Advisory Committee for Microbiological Criteria for Foods and as the U.S. Delegate to the Codex Alimentarius Committee on Food Hygiene for a decade.

References

1. Codex Alimentarius Commission. 2007. Working Principles for Risk Analysis for Food Safety for Application by Governments. (CAC/GL 62-2007).

2. Codex Alimentarius Commission. 2007. Working Principles for Risk Analysis Application in the Framework of the Codex Alimentarius. (CAC/GL 62-2007).

3. Codex Alimentarius Commission. 1999. Principles and Guidelines for the Conduct of Microbiological Risk Assessments. (CAC/GL 30-1999).

4. Codex Alimentarius Commission. 2007. Principles and Guidelines for the Conduct of Microbiological Risk Management (MRM). (CAC/GL 63-2007).

5. International Commission on Microbiological Specifications for Foods. 2002. Microorganisms in Foods 7: Microbiological Testing in Food Safety Management. Kluwer Academic/Plenum Publishers: New York.

6. Codex Alimentarius Commission. 2008. Code of Hygienic Practice for Powdered Formulae for Infants and Young Children. (CAC/RCP 66-2008).

7. Codex Alimentarius Commission. 2007. Guidelines on the Application of General Principles of Food Hygiene to the Control of Listeria monocytogenes in Foods. (CAC/GL 61-2007).

8. Buchanan, R. L. 2010. “Bridging Consumers’ Right to Know and Food Safety Regulations Based on Risk Assessment.” In Risk Assessment of Foods, C.-H. Lee, ed. KAST Press: Korea; pp. 225-234.
