Food Microbiology in Focus, An Expert Roundtable, Part 1

In August, Food Safety Magazine Editorial Director Julie Larson Bricher had the opportunity to speak with several recognized experts from industry, research and government to discuss hot topics in food microbiology. This roundtable-in-print article is based on part of that discussion, which was co-moderated with Rich St. Clair, Industrial Market Manager with Remel, Inc., and took place at the International Association for Food Protection’s annual meeting in Calgary, Canada.

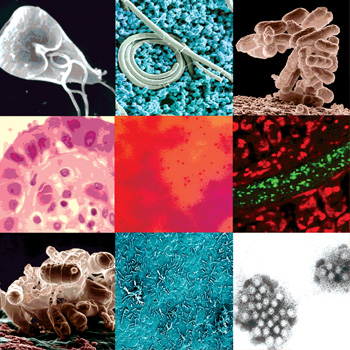

In Part 1, the panelists discuss the top microorganisms of concern to the food industry, public health risk assessment issues, and provide some insights into significant advances in test methods and tools available to the food supply chain today.

In Part 2, to be published in the December/January 2007 issue of Food Safety Magazine, the discussion continues on the topic of challenges associated with the adoption and application of advanced food microbiology methods and tools in the food industry, and how the food industry can strategize to meet these challenges and realize the benefits of traditional, new and hybrid techniques.

The Panelists:

J. Stan Bailey, Ph.D., is a research microbiologist with the Russell Research Center, Agricultural Research Service, U.S. Department of Agriculture, in Athens, GA, where he is responsible for research directed toward controlling and reducing contamination of poultry meat products by foodborne pathogens such as Salmonella and Listeria. Bailey has authored or coauthored more than 400 scientific publications in the area of food microbiology, concentrating on controlling Salmonella in poultry production and processing, Salmonella methodology, Listeria methodology, and rapid methods of identification. He is currently vice president of the executive board of the International Association for Food Protection.

Mark Carter is General Manager of Research with the Silliker Inc. Food Science Center in South Holland, IL. He is a registered clinical and public health microbiologist with the American Academy of Microbiologists and chair-elect of the American Society for Microbiology’s Food Microbiology Division. Prior to joining Silliker in 2005, he served as a Section Manager for Microbiology and Food Safety for Kraft Foods North America where he was responsible for the Dairy, Meals, Meat, Food Service and Enhancer product sectors.

Martin Wiedmann, Ph.D., Associate Professor, Department of Food Science, Cornell University, is a world-recognized scholar, researcher and expert on critical food safety issues affecting the dairy/animal industry. Wiedmann addresses farm-to-fork food safety issues with a diverse educational background in animal science, food science, and veterinary medicine. His work with Listeria monocytogenes is internationally recognized and has significantly contributed to improving our understanding of the transmission of this organism along the food chain.

Margaret Hardin, Ph.D., is Director of Quality Assurance and Food Safety with Boar’s Head Provisions Co., the nationally known ready-to-eat meat and cheese processor serving the delicatessen and retail markets. Previously, Hardin held positions as Director of Food Safety at Smithfield Packing Co., Sara Lee Foods and the National Pork Producers Council, and as a research scientist and HACCP instructor with the National Food Processors Association in Washington, DC. Her efforts have been directed in areas of food safety, research, HACCP, and sanitation to protect the public health and assure the microbiological quality and safety of food.

Joseph Odumeru, Ph.D., is the Laboratory Director, Regulatory Services, Laboratory Services Division, and Adjunct Professor, Department of Food Science, University of Guelph. He is responsible for food quality and safety testing services provided by the division. His research interests include development of rapid methods for the detection, enumeration and identification of microorganisms in food, water and environmental samples, molecular methods for tracking microbial contaminants in foods, automated methods for microbial identification, shelf life studies of foods and predictive microbiology. His research output includes 65 publications and review papers in peer-reviewed journals, and 70 abstracts and presentations at scientific meetings.

Julian Cox, Ph.D., is Associate Professor, Food Microbiology in Food Science and Technology at the School of Chemical Sciences and Engineering, University of New South Wales, Sydney, Australia. He has taught for over a decade in the areas of foodborne pathogens, spoilage, quality assurance, rapid microbiological methods and communication skills. His research activities revolve around a range of foodborne pathogens, particularly Salmonella and Bacillus. He provides advice on food safety through organizations such as Biosecurity Australia and input into the development of Australian standards for microbiological testing of foods. He also sits on the editorial boards of Letters in Applied Microbiology, the Journal of Applied Microbiology and the International Journal of Food Microbiology.

Food Safety Magazine: What do you consider to be the top microorganisms of concern today to food supply chain companies, and why? What organisms are on the horizon that industry should better understand?

Julian Cox: I think Salmonella is going to continue to be one of the top bacterial pathogens, although I think that U.S. epidemiological data is suggesting this pathogen is certainly on the decline since the mid- to late 1980s and early 1990s. I believe viruses are going to be increasingly important. There are a number of reasons that these are of concern to the food industry, including the fact that methods of detection are not reliable and the infectious dose is typically so low. I think as global trade continues to increase, we may see increasing problems with parasites, as well, both in terms of the ones we recognize as foodborne and new ones. That’s probably a good place to start.

Mark Carter: I agree with some of Julian’s points. I also think that an overarching problem we face in food microbiology today is that we have to identify organisms of concern by looking backwards, from an epidemiological standpoint. We don’t know what an organism of concern is until there’s a foodborne illness outbreak. A majority of foodborne illness right now isn’t diagnosed but the assumption is made that there is some organism that we already know is part of an unknown cause.

For example, there is pressure from the legislative side that says E. coli O157:H7 is what we should try to detect, even though we know there is a larger group of EHEC that are all infectious. Yet we focus and do all of our statistics on O157, its prevalence and where it is causing illness, but we don’t go back and say, “There is a whole wider group of organisms that cause problems.” So half of the issue with naming top pathogens of concern is we just don’t have a handle on what is causing the illness or we would be better able to make the assumption what organisms will be next.

Martin Wiedmann: I agree with Mark that E. coli O157:H7 is going to be a big issue and EHEC as a whole. By the latter, I mean that there are a lot of other pathogenic E. coli that we are completely ignoring right now. This is because, if we’re honest, we don’t even know how to define a pathogenic E. coli at this time. We know several genes and gene factors, but we don’t know how to define them.

I think the organisms we are completely ignoring in food microbiology are any that cause chronic disease. Right now, we only look at acute disease. If you eat something and get sick within two months, we’re going to look at Listeria as a possible cause. But anything that makes us sick long-term, such as cancer, chronic sequelae or arthritis, is difficult because we can’t do the epidemiology. Take Helicobacter pylori for example. Is it foodborne? Maybe not, but it’s definitely waterborne. We can’t link it to a food because you get sick two years later. What food was it? What pathogen was it? Is it slow-growing?

Joseph Odumeru: Yes, for quite a while we’ve made E. coli O157:H7 the main focus but I think this has been the wrong approach from which to start because there are a lot of toxin-producing E. coli out there that are of concern to food producers. The business line of thought is that we cannot include all EHEC toxin-producing organisms as part of every food testing program. In fact, if you submit a sample to our lab and you want to look for only O157:H7, you are going to get more answers than you are asking for. We are going to find all related organisms at once.

The other organism that I think is top of mind today in the food industry is Listeria monocytogenes because this organism is unique in its ability to survive in a variety of conditions and it is ubiquitous, found in soil and elsewhere in the natural environment. With zero tolerance regulation in place for this organism, Listeria will continue to be a big issue. Because of its ubiquitous nature, Listeria can contaminate food at the farm level, to processing, through the retail level. So it is a big concern because of its ability to contaminate foods even during processing in the plant environment and the focus on sanitation and control to eliminate the organism will remain a major focus.

Stan Bailey: I would add that food supply chain companies’ primary issues today center on spoilage organisms, particularly yeasts and molds. Pathogens such as E. coli, Salmonella and Campylobacter get a lot more publicity but the organisms that drive food companies crazy are the spoilage organisms that affect shelf life.

I agree that viruses are one of the issues on the horizon that I would be most concerned about. There certainly is increasing evidence that norovirus and hepatitis are more associated with foods than we knew in the past, and now we are dealing with avian influenza and other potential pandemic strains, which makes viruses of critical interest for food microbiologists.

Margaret Hardin: I agree on some level with what everyone is saying in that we only know what we know right now, and more often than not we don’t know what’s wrong until something goes wrong. As a result, at the industry level we spend an awful lot of time reacting to regulations, and I think that’s the immediate bottom line. But I think as we do a lot more international import and export of goods (and of people), food- and waterborne viruses are going to play a bigger role. Then we’ll need to look at the real bottom line of the impact of employee-to-employee infection or the transmission of these from employees to the foods they handle in terms of what do we do about it. A lot of times employees don’t want to say they are ill or you don’t know what their health status is when they come into the workplace.

I also think Listeria is going to stay at the forefront in terms of microorganisms of concern to the food industry, whether we like it or not. It affects the whole food chain, as Joe points out. I also agree that Salmonella is going to stay in focus because the industry and the regulators are looking at trends that show foodborne illness associated with Salmonella decreased, leveled out and now appears to be on the rise again. I think there is a lot more to learn about this organism to determine whether we can further reduce it with current intervention technology, either in production or through other methods.

This past year, we’ve struggled with determining the risk to product from avian influenza. When such viruses and other organisms emerge, it is important that we are able to determine what is the potential risk to the workers on the line, whether it’s in slaughter, whether it’s in processing, whether it’s in further processing or in handling the final product. It comes down to our methods: What are we looking for? Why are we looking for it? And before we start looking for it, what are we going to do with those results? Before I take an environmental swab or sample, we need to know what we are going to be able to do with those results. How are we going to act upon that data, and can we act upon that data?

Martin Wiedmann: We haven’t yet addressed the issue of multi-drug resistance, which is clearly on the horizon. Salmonella is already high up on the list, but I think we are going to see even drug-resistant non-pathogenic organisms coming to the forefront. If a food has any organism with resistance genes, those genes can be transmitted to a pathogen which causes a whole new set of issues for public health—and for detection because the resistance can move.

Similarly, I think Listeria is an important pathogen not only unto itself, but also as a group. These are opportunistic pathogens, and when we look at the host we can see that the host population is going to change. More and more foods are consumed by immunocompromised people. Are we going to produce different foods for highly immunocompromised people, those coming off cancer therapy or who have had an organ transplant, knowing that a new slew of organisms are going to show up as the host morphs—organisms that do not pose the same risk to the typical immunocompetent consumer? And that’s just one pathogen, but we can think of some others and many we don’t know as well. But that’s going to come up in the future, I think, for some foods.

Food Safety Magazine: Given the number of organisms for which we currently test, let’s talk about significant advances in food microbiological detection and test methods and tools.

Joseph Odumeru: At our lab, which conducts a lot of routine testing for regulatory agencies, industry and academia, we find that each client has different expectations when looking for a target organism. Industry wants fast results. Once we have the samples, they want us to deliver test results so they can get the product out. It’s not unusual for us to get the product samples in the morning, and that evening receive a call from the food company asking for the status of the testing and when they can expect results. So for industry, advances in rapid methods are very important.

On the other hand, the regulatory agencies typically require the use of a culture method, regardless of what type of screening method is used, and they have a good reason for doing this because cultural methods isolate the target organism and provide a high level of specificity. Of course, academia or research aims also focus on specificity. These clients are not as concerned with how quickly the lab can provide results, but rather, they want you to find the target organism. Often, if the lab doesn’t find the organism, it is usually the academic researchers who question why you didn’t and ask what method you used.

There are many advances that I see in the area of standard cultural methods that may address all of these expectations. In our lab, we routinely use immunomagnetic separation (IMS), for example. This technique has been around for quite awhile but we are beginning to see an increase in its usefulness because it allows very low level detection capability, down to a single organism. Before you do any further testing, whether it is by culture or PCR, you can combine IMS with any type of detection/screening method and significantly increase the recovery rate of the organism of interest. Our lab introduced this to the poultry industry in Ontario, Canada, a few years ago and we increased our recovery by 100%. This is with samples that come from the farm, full of microflora.

I would also mention that I use chromogenic media widely now, from fresh water testing to food to environmental samples. Again, our lab combines the IMS technique with the use of chromogenic media so that we can increase detection to a higher level than ever before.

Stan Bailey: I think in terms of standard and manual methods, there is an actual understanding by more and more people about the need to properly handle samples on the front end to be able to statistically sample relevant numbers of samples, to be able to handle samples in such a way as to recover injured cells and in a way that you can distinguish or separate the target pathogen from the food. It doesn’t do you any good to have sophisticated detection tools if you don’t have a good sample taken on the front end.

Mark Carter: I agree with everything that has been said so far, but I’d like to take it back a step. I think there have been great advances made on the detection side. There are many companies in the industry doing good jobs in point detection, from developing chromogenic agars to several types of automated systems. But if you look at what’s coming down the road, I don’t think the food industry quite understands how to use methods like a microassay, or assays that strictly target certain proteins, proteomics, and so on, that are being used in chemistry now. Detection methods that are outside the realm of those normally used in food microbiology labs, such as spectroscopy, are starting to pop up in the literature. So, on the detection side we’re seeing huge advances used by other sciences that are finally going to flow over into food microbiology. Even so, we still lose sight of the fact that we don’t do a good job of preparing or culturing a sample to optimize the detection system. Like Stan is saying, you’ve also got to get a good sample in the first place.

There are a lot of manufacturers that have made some really good strides in tweaking media that have been used for 100 years. And if you look at the original purpose for the development of some of these media, you’ll find that today we don’t use them for the same purpose. Again, I think if you look at the whole process, our challenge in optimizing the advances in microbiological detection methods is understanding and getting better at sampling and sample preparation, as well as understanding the statistics and the probability of what you’re doing when you sample.

Julian Cox: Years ago people began to develop methods, and those have become increasingly “golden.” If you go back to the original literature you find that people had question marks over some of the media used, for example, yet somehow the methods get etched deeper and deeper into stone and become, by default or otherwise, reference methods. Even some of the current standard methods seem to me a little retrograde. We appear to have returned to some of the so-called tried, tested and true media, and they’re not necessarily the best.

When we look at coupling concentration technologies with traditional culture methods, even direct plating, my concern is with the increasing push to shorten the pre-detection phase, which is where recovery from injury is critical. Now we are seeing some spectacular failures in relation to single-step systems, simply because we are not taking into account the dynamics of recovery and growth. I agree with Mark that we have focused very heavily on how we actually ‘see’ the bug. But whether we are talking about a colony on a plate or a signal on an immunoassay, we have not spent nearly enough time understanding the first phases of the detection process, not only in terms of traditional methods but also with regard to the usefulness of any other methods that we have developed. Injury is still such a huge issue that we need to understand. I would argue that we have to know much more about the physiological status of the organism before standardizing any method.

Mark Carter: Well remember, people worry about deviating from a standard. I have always been really a strong opponent of saying, “Okay, here’s the standard, this is the baseline, and so that’s the least you have to do.” I think it is better to say that if there is a method that is better for your product or purposes, provided you can prove that it is equivalent or better than the reference method, then it’s open for use. The standard is the floor, as far as I am concerned, whether it is a regulatory, ISO or proprietary standard within a company. Let’s say Margaret finds a particular method that works really well for one of her company’s products. They should be able to use that as long as they have done their due-diligence and are able to prove that this method is going to be great for this product. Otherwise, you kill the incentive for people to do anything better; it’s going to be, “This is the baseline and it’s all I am going to do.”

Martin Wiedmann: I think one thing we haven’t mentioned on the topic of advances in food microbiology methods is molecular subtyping. Although it’s not the latest advance, it is certainly significant, and not just for PulseNet surveillance data but also because food companies and academia are now using it to understand transmission of foodborne pathogens. If we can determine how the pathogen gets there, we can better determine how to control it. We’ve made huge advances in PCR in the past decade, moving on to a level where most labs can actually use it.

Stan Bailey: Yes, when we’re talking about the screening methods for pathogens, we’ve had an evolution from ELISA and immunoassay methods to molecular methods such as polymerase chain reaction (PCR), through to real-time PCR and reverse-transcriptase PCR systems, which may allow for quantitation in some cases where the cell numbers are high enough. The fact is that everyone is being asked to do far more with less in the laboratory, so automation in general has been a significant advance in food microbiology. Specifically, automated pathogen detection systems that are self-contained so that you don’t have to have a separate room to do pathogen testing have increased efficiency in the lab.

There’s still a lot of room for improvement in screening methods, too, although there have been some advances in alternative screening tools based on optical detection techniques. Clearly, we know that about 10 to 20 times more indicator tests are run as compared with pathogen tests in the food lab, so anything you can do to screen for these indicators in a more efficient, automated manner will be an important advance coming along in the next few years.

Mark Carter: I think the big challenge we are facing is the integration of the traditional enrichment methods, rapid and automated methods, and newer methods that we can adopt from other sciences and apply to food microbiology testing to increase efficiencies. For example, you may use a fluorogenic detection system and the additional enrichment media you use has some fluorescent compound in it that gives rise to interference with the antigen expression. Now you have to modify the method. I think we are still running on parallel tracks in terms of using both traditional methods and rapid methods systematically. So when we try to integrate a traditional enrichment and a rapid method and then add molecular subtyping only to find that the enrichment might bias for specific subtypes, the promise of advanced detection capabilities is diminished.

By taking a systems view, we would need to take a step back and consider the whole testing process, from sample prep at the front end (what good is a 200 µL PCR tube if I can’t fit 50 grams of ground beef into that tube?) through advanced subtyping we may want to do at the other end to determine how we can optimize everything to work together. At present, we may be using a method that is 100 years old and some that are two years old and we are trying to make them fit together, and they don’t. We are better served by trying to understand their interactions, and to step back and integrate them all into one system. Of course, this is a big challenge.

Ultimately, we’re not doing a really good job at being quantitative, and I believe that’s a huge challenge coming on, basically, using good statistics. I mean, what is the significance of finding the same subtype here and there? Give me a p-value. Give me some probability. How likely is it that my product was responsible for a foodborne illness case considering everything from the method I use to doing a subtype?

Food micro-monitoring is traditionally very bad about doing statistics. Some people are doing a very good job but microbiologists in general don’t like stats. We have got to get over that and train our students. We have got to incorporate statistics into a lot of things, because we are really talking probabilities. We are making decisions about a lot of money, whether it’s recalls or anything else, and all without any good stats behind it.

Margaret Hardin: It is interesting to talk about this method or that method, but when the president of the company is e-mailing the lab every hour wanting to know when the results are coming in because there is so much product on hold, I am working in a reality in which there is little time to look for injured cells or argue the fine points of statistics.

At the end of the day, I want screening and detection methods that are fast, but that are also easy to use. In a food plant, you want the people working on the line and the people taking samples to be able to provide those to the lab with minimal risk of cross-contamination, and you want it to be easy to use in the lab. The detection system has to be reasonably priced because I can’t buy a $35,000 piece of equipment every two years that’s only going to test for one parameter. And again, although I recognize the importance of understanding the role of injured cells, I don’t have time to look for them because I have 75,000 pounds of meat on hold with a regulator and corporate management looking on, and that sample is only as good as the piece I tested at that time.

I get phone calls all the time from test kit and detection system suppliers who ask, “If we cut two hours off of the enrichment time, will you buy our test?” There are a lot of things on my list about what I want to know about the test and not just about how rapid it is, because if results come in at 3:00 o’clock in the morning, the sanitation crew may not know how to read those results.

There is a role for standard culture methods, but as Mark just said, when we are trying to fit those into a rapid method box and they don’t all work together we may not be getting the full benefit of the results that are possible, and perhaps needed. I want to make sure that we are covering as much as we can with a rapid method, which is what I deal with mostly, and I don’t know if we are getting the accuracy, the sensitivity or the repeatability that we are getting with some of the gold standards and traditional methods.

Again, what role do injured cells play, and how much should I worry about that? How much can I worry about that? How reliable are the rapid methods that I am using? What is my confidence level when I make the decision to release product?

Julian Cox: You’re right, Margaret, and I think this idea of confidence levels takes us back to the importance of statistics and sampling. There is a textbook example in which a product is tested for Salmonella. We test using n = 60 and c = 0, but also test using n = 95 and c = 1 and the latter is actually better, statistically, in terms of confidence in the product. Statistically you’ve pulled out the one sample that has the organism in it, and therefore the rest of the product is safe. Realistically though, would you eat that food knowing that you had isolated Salmonella, Listeria, E. coli, any pathogen? Are you willing to accept the risk, assuming statistically that you have pulled the sample out of the bag that contained the organism?

Martin Wiedmann: Well, that’s the one that will end up in the newspaper.

Julian Cox: This is exactly what I’m saying. That statistically, if you’ve tested 60 samples and all are negative, you make the decision to release the product. But, you can argue, statistically, that batch is actually less safe than the one where you tested the 95 samples. Of course, like Martin has said, if someone got sick from the product tested using c = 1, then you would be front-page news.

Joseph Odumeru: And that is one of the reasons why some companies send just one sample to the lab and make a decision to release product based on that one result. They don’t understand the statistics.

Mark Carter: Yes, but if my company is sending out only one sample for testing and I am going to make a decision based on just that I may as well have just waved a rabbit’s foot over it.
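The sampling plans mentioned in this exchange (n = 60 with c = 0, n = 95 with c = 1, and a single sample) can be compared with a short calculation. What follows is a minimal sketch, not a method described by the panelists. It assumes a simple binomial model: each sample unit independently tests positive with a probability equal to the lot's true prevalence, and the test itself never misses or misreports. The function name `acceptance_probability` and the prevalence values in the loop are illustrative assumptions.

```python
from math import comb


def acceptance_probability(n: int, c: int, prevalence: float) -> float:
    """Probability that a lot is accepted under an (n, c) attributes plan.

    The lot is accepted when at most c of the n samples test positive.
    Assumes independent samples and a perfect test (illustrative model only).
    """
    return sum(
        comb(n, k) * prevalence**k * (1.0 - prevalence) ** (n - k)
        for k in range(c + 1)
    )


# Plans taken from the discussion; the single-sample plan is the
# "one sample to the lab" case.
plans = {"n=60, c=0": (60, 0), "n=95, c=1": (95, 1), "n=1, c=0": (1, 0)}

# Hypothetical true prevalence levels, chosen only for illustration.
for prevalence in (0.01, 0.05, 0.10):
    for label, (n, c) in plans.items():
        p_accept = acceptance_probability(n, c, prevalence)
        print(f"prevalence={prevalence:.2f}  {label:<10}  P(accept)={p_accept:.4f}")
```

Under these assumptions, a single negative sample would accept a lot with 5% prevalence 95% of the time, which is the statistical point behind the rabbit's foot remark. How the two larger plans rank against each other depends on the prevalence assumed, so the output is best read as a comparison across rows, not as a verdict on either plan.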
